Thoughts on the Edge of Chaos

M.M. Taylor and R.A. Pigeau

2. Basic Ideas: Information and structure, Attractors and Repellors

3. Basic Ideas: Catastrophe

7. Surprise and importing structure

Surprise: importing structure

A logic map has no divergent states, by definition: the future is entirely predictable from the present state. The converse is not true in general, because two states can have trajectories that combine into a single state. The logic system must be dissipative, and it is not possible to "predict" the past trajectory of a logic state. If states can combine but not split, the number of attainable states must decrease, or at least not increase, over time. If an outside observer knew the range of the state space (i.e. the set of states the system could have been in) at time t0, that observer would need a further amount of information H to specify the exact state. As time progressed, the observer would be able to specify the exact state with less information than H, since the number of available states would have decreased.

As the states combine, they approach or join the attractors of the map, and if those attractors are fixed or periodic, the system approaches the "death" map. Chaotic (strange) attractors cannot exist in a logic map: the number of possible states is finite, so the longest possible period is one in which every state is visited exactly once. Isolated logic maps are therefore condemned finally to "go round in circles."
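The shrinking of the attainable state set can be illustrated with a small simulation. This is only a sketch under stated assumptions: the logic map is taken to be an arbitrary deterministic function on 64 states, and the names `random_logic_map`, `attainable_counts`, and `cycle_length` are hypothetical helpers introduced here, not part of the original discussion.

```python
import math
import random

def random_logic_map(n_states, seed=0):
    """Return a random deterministic map on the states 0..n_states-1."""
    rng = random.Random(seed)
    return [rng.randrange(n_states) for _ in range(n_states)]

def attainable_counts(step, n_steps):
    """Count the states the system could occupy after 0..n_steps steps,
    given that it could have started in any state."""
    reachable = set(range(len(step)))
    counts = [len(reachable)]
    for _ in range(n_steps):
        reachable = {step[s] for s in reachable}  # trajectories may merge, never split
        counts.append(len(reachable))
    return counts

def cycle_length(step, start):
    """Follow one trajectory until a state repeats; return the period of its cycle."""
    first_seen = {}
    state, t = start, 0
    while state not in first_seen:
        first_seen[state] = t
        state = step[state]
        t += 1
    return t - first_seen[state]

logic_map = random_logic_map(64)
counts = attainable_counts(logic_map, 20)
bits = [math.log2(c) for c in counts]  # information needed to pin down the exact state
print(counts)
print([round(b, 2) for b in bits])
print("period of the cycle reached from state 0:", cycle_length(logic_map, 0))
```

The count of attainable states never rises from one step to the next, so the information an observer needs to identify the exact state never rises either, and every trajectory ends on a fixed point or a periodic cycle.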

To release a dissipative logical system from gradual death requires the importation of structure from outside. The system can no longer be informationally isolated. It must be moved to a state that is not on its forward (future) trajectory or on any of its possible backward (past) trajectories; in other words, it must be "surprised" by the importation of new information, that is, new structure.

The structure that is imported must come from some external system, which is most easily imagined as another logical system. One can then depict the original system and the external system together as one larger, isolated system. Inasmuch as the newly imported structure is predictable from the external system but not from the original one, the total information required to specify the joint system is not increased by the importation: the imported structure is totally redundant. In the course of being "surprised", the original system has added to its own structure, but the larger world in which it is included has not gained structure; more probably, it has lost total structure.

|

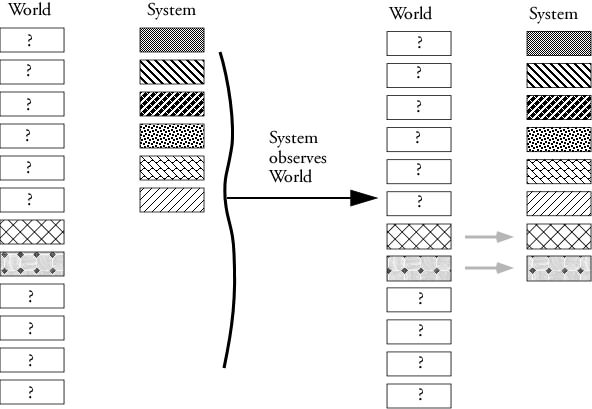

| Figure 11. Importing structure. The left panel shows the state of the system before it observes (and is surprised by) the state of the world. A third-party observer privy to the state of the system and of the world would see 20 different entities with independent states. Supposing each took 10 bits of information to describe it, to describe the system and the world together would take 200 bits. After the system has observed two elements of the world about which it had no prior knowledge except its own imaginings, the world and the system both contain the same information about those aspects. To describe the entire complex would now take only 180 bits. |
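The arithmetic in the caption can be written out explicitly. This is a sketch that assumes the 20 entities are independent before the observation, and that each observed element of the world becomes fully redundant with its copy in the system afterwards. The symbols $n$ (number of entities), $b$ (bits per entity), and $k$ (number of observed elements) are introduced here only for illustration.

```latex
H_{\text{before}} = n\,b = 20 \times 10 = 200 \text{ bits},
\qquad
H_{\text{after}} = (n - k)\,b = (20 - 2) \times 10 = 180 \text{ bits}.
```

The system has gained 20 bits of structure about the world, while the description of the system and world together has become 20 bits shorter.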

There is a close formal analogy between being surprised and the process of eating. The organism imports structured materials which it uses to maintain the structural stability of its own body, and returns less-structured materials to the world through excretion. The overall structure of the world is reduced, though that of the organism is maintained. The importation of surprise will be a fundamental element in our discussion of teaching, training, and learning, where we will deal with concepts of how surprise is imported and incorporated.

Fractal Growth Structures: the Cognitive Snowflake

The "Cognitive Snowflake" is a fundamental concept in our view of cognition. It is a fractal structure that describes the knowledge held by a cognitive system; but more importantly, it describes how that knowledge is interpreted.

The basis for the cognitive snowflake is surprise. Surprise is necessary if a semi-chaotic dissipative system is to avoid death. The system imports structure from outside, but that structure cannot be predictable from the system state. The imported structure can connect with any part of the pre-existing system structure.

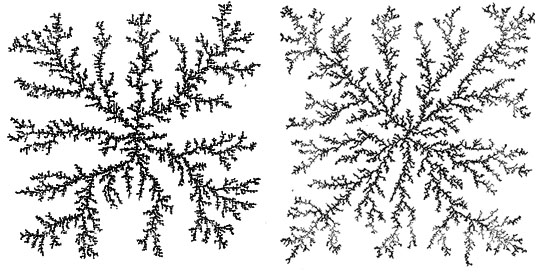

We will demonstrate a trivially simplified analogy to the Cognitive Snowflake. Symbolize the pre-existing structure of the system as a set of dots connected in a space of N dimensions; a surprise consists of adding another dot or structure of dots to some part of the structure. The added dot or structure is asserted to come from an arbitrary point, to move in a random walk, and to stick to the pre-existing structure wherever it first touches. This growth procedure has been well studied under the name "diffusive growth" (e.g. Witten & Cates, 1986; Sander, 1987). The result of such a procedure is a spindly branching structure we call a "fractal snowflake" (Fig. 12).

Figure 12. Two examples of "fractal snowflakes" generated by diffusive growth, using slightly different growth parameters. In each case, a dot entered the space from outside at a random location, and executed a random walk until it touched a dot that was already part of the snowflake, at which point it became a fixed part of the growing snowflake.
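The growth procedure described above can be sketched in a few lines. This is a minimal illustration, not the procedure used to produce the figure: it assumes a two-dimensional square lattice, a single seed dot, four-neighbour contact as the meaning of "touching", and a launch circle and discard radius chosen only to keep the run short. The names `grow_snowflake` and `render` are hypothetical.

```python
import math
import random

MOVES = [(1, 0), (-1, 0), (0, 1), (0, -1)]

def grow_snowflake(n_dots=150, seed=1):
    """Grow a cluster by diffusive growth; return the set of occupied lattice cells."""
    rng = random.Random(seed)
    cluster = {(0, 0)}   # pre-existing structure: one seed dot (assumption)
    max_r = 0.0          # largest distance of any cluster dot from the seed
    while len(cluster) < n_dots:
        # A new dot enters from a random point on a circle just outside the cluster.
        launch_r = max_r + 5
        angle = rng.uniform(0, 2 * math.pi)
        x = round(launch_r * math.cos(angle))
        y = round(launch_r * math.sin(angle))
        while True:
            dx, dy = rng.choice(MOVES)       # one step of the random walk
            x, y = x + dx, y + dy
            if math.hypot(x, y) > launch_r + 20:
                break                        # wandered far away: discard, launch another
            if any((x + ax, y + ay) in cluster for ax, ay in MOVES):
                cluster.add((x, y))          # first touch: the dot sticks rigidly
                max_r = max(max_r, math.hypot(x, y))
                break
    return cluster

def render(cluster):
    """Return a text picture of the cluster, '#' for a dot and '.' for empty space."""
    xs = [x for x, _ in cluster]
    ys = [y for _, y in cluster]
    rows = []
    for y in range(max(ys), min(ys) - 1, -1):
        rows.append("".join("#" if (x, y) in cluster else "."
                            for x in range(min(xs), max(xs) + 1)))
    return "\n".join(rows)

snowflake = grow_snowflake()
print(render(snowflake))
```

Changing `seed` gives a different snowflake each time, and raising `n_dots` makes the branching more apparent at the cost of a longer run.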

The fractal snowflake has several properties that are not immediately obvious:

- The average density of the snowflake (dots per unit area) decreases without limit as the snowflake grows. The region covered by the snowflake is effectively empty.

- Although the snowflake covers effectively zero area (its dimension being about 1.6 in a 2-dimensional space), its outer "twigs" intercept almost all incoming dots. Its inner structure is almost hidden from modification by the addition of new structure: to anticipate the cognitive metaphor, "As the twig is bent, so grows the tree."

- The opacity of the outer twigs of the snowflake holds only in spaces of up to 7 dimensions (Witten & Cates, 1986). In higher-dimensional spaces, the interior of the snowflake may be accessible to incoming dots.
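The first property follows from the standard mass-radius scaling of a fractal. As a sketch, let $N(R)$ be the number of dots within radius $R$ of the centre, $D$ the fractal dimension of the snowflake, and $d$ the dimension of the embedding space; these symbols are introduced here for illustration only.

```latex
N(R) \propto R^{D},
\qquad
\rho(R) = \frac{N(R)}{R^{d}} \propto R^{\,D-d},
\qquad
D \approx 1.6,\ d = 2 \;\Rightarrow\; \rho(R) \propto R^{-0.4} \to 0 \text{ as } R \to \infty .
```

Because $D < d$, the average density falls toward zero as the snowflake grows, even though the number of dots increases without limit.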

The fractal snowflakes discussed by Witten and Cates (1986) and by Sander (1987) have unitary points as their elements. These points move in some space until they accidentally meet the growing snowflake and then stick rigidly, or in some cases until they meet one another to form small snowflakes, which may eventually find and bind to the main one. As a first approximation, we identify the growing arms of the snowflake as the structure of understanding that the system has on a particular topic or performance area. Successive branchings represent differentiation of the topic area into discrete categories, which relate to each other as elements of a main topic. As new information comes in (as a surprise), it creates an extension or a branch related to information already known. If it did not, it would merely confirm what was already known, and would not help in maintaining the structure of the system's knowledge.

As a model of the structure of knowledge, this point-element, rigidly connected snowflake is pretty poor. It asserts that knowledge is a strictly tree-structured hierarchy, that there is no forgetting, and that knowledge from one topic area is never related to knowledge from another. All of these limitations will be removed below, after we consider the maintenance of structure in a replicating environment.